User-Controllable Multi-Texture Synthesis with Generative Adversarial Networks

1 Samsung AI Center Moscow; 2 Skolkovo Institute of Science and Technology; 3 National Research University Higher School of Economics; 4 Samsung-HSE Laboratory, National Research University Higher School of Economics; 5 Federal Research Center "Computer Science and Control" of the Russian Academy of Sciences

arXiv 2019

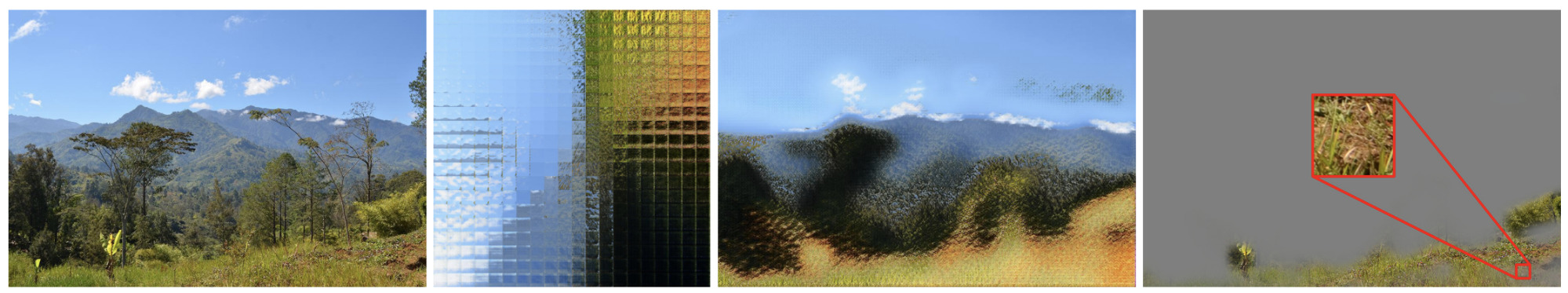

One can take (1) a 3264 × 4928 landscape photo of New Guinea, learn (2) a manifold of 2D texture embeddings for this photo, visualize (3) a texture map for the image, and perform (4) texture detection for a patch using distances between the learned embeddings.
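
The detection step in the caption can be illustrated with a minimal sketch. It assumes that each image patch already has a 2D embedding, that "distance" means Euclidean distance, and that a patch counts as detected when its embedding lies within a fixed radius of the query. The function name `detect_texture`, the `radius` threshold, and the toy embedding values are all hypothetical and are not taken from the paper.

```python
import math


def detect_texture(embedding_map, query, radius):
    """Mark grid cells whose 2D embedding lies within `radius` of `query`.

    embedding_map: list of rows, each a list of (x, y) patch embeddings.
    query: (x, y) embedding of the reference patch.
    radius: hypothetical distance threshold (an assumption, not from the paper).
    """
    mask = []
    for row in embedding_map:
        # Euclidean distance in embedding space is assumed here.
        mask.append([math.dist(e, query) <= radius for e in row])
    return mask


# Toy 2x3 grid of made-up embeddings forming two loose clusters.
embedding_map = [
    [(0.0, 0.0), (0.1, 0.0), (2.0, 2.0)],
    [(0.0, 0.2), (1.9, 2.1), (2.0, 1.8)],
]
query = embedding_map[0][0]  # use the top-left patch as the reference
mask = detect_texture(embedding_map, query, radius=0.5)
```

In this toy setup the resulting mask selects the patches whose embeddings fall in the same cluster as the query. In practice the embeddings would come from a trained encoder, and the threshold would need to be tuned to the learned manifold.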