Latent-Space Laplacian Pyramids for Adversarial Representation Learning with 3D Point Clouds

¹Skolkovo Institute of Science and Technology; ²DeepReason.ai, Oxford, UK; ³State Key Lab, Zhejiang University, China; ⁴IMPA, Brazil

International Conference on Computer Vision Theory and Applications 2020

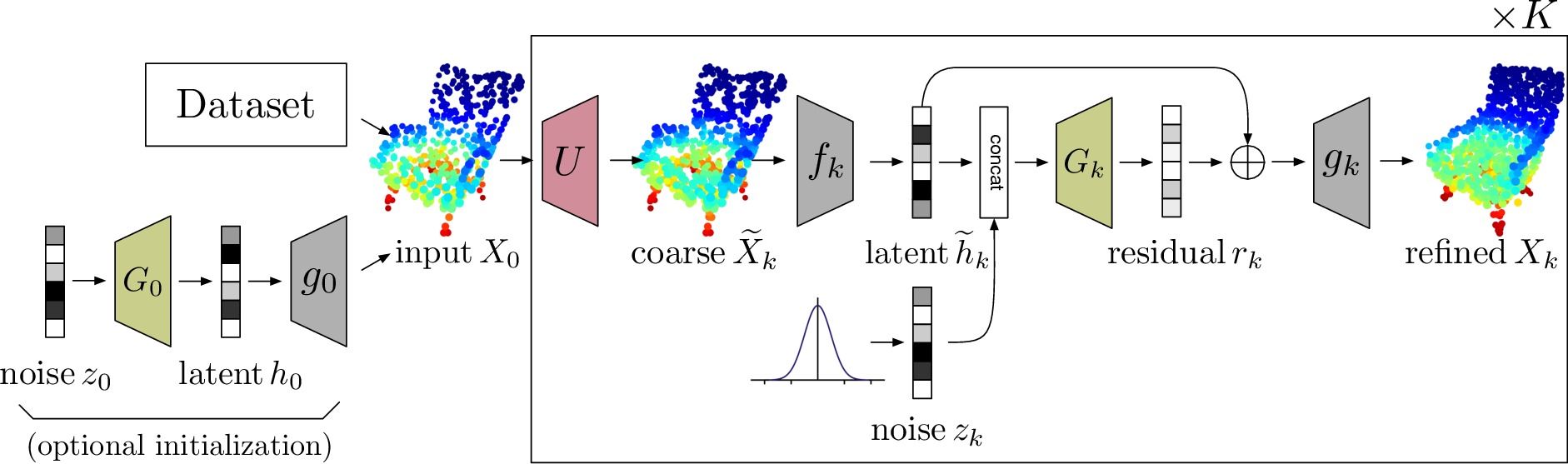

Full architecture of the LSLP-GAN model. The network either accepts or generates an initial point cloud X₀ and processes it with a series of K learnable steps. Each step (1) upsamples its input using a non-learnable operator U, (2) encodes the upsampled version into the latent space using fₖ, (3) corrects the latent code via a conditional GAN Gₖ, and (4) decodes the corrected latent code using gₖ.
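The four steps in the caption can be traced with a minimal NumPy sketch. Everything in it is an assumption for illustration: the midpoint-based `upsample` is a hypothetical stand-in for the non-learnable operator U, and the hypothetical `Level` class replaces the trained encoder fₖ, conditional generator Gₖ and decoder gₖ with random-weight linear and one-hidden-layer maps (the residual form of the latent correction is also our assumption). It shows only the data flow and how the point count grows from level to level, not the authors' implementation or training procedure.

```python
import numpy as np


def upsample(points: np.ndarray) -> np.ndarray:
    """Hypothetical non-learnable operator U: for every point, add the midpoint
    between it and its nearest neighbour, doubling the point count."""
    diff = points[:, None, :] - points[None, :, :]
    dist = np.einsum("ijk,ijk->ij", diff, diff)   # squared pairwise distances
    np.fill_diagonal(dist, np.inf)                # ignore each point's self-distance
    nearest = dist.argmin(axis=1)
    midpoints = 0.5 * (points + points[nearest])
    return np.concatenate([points, midpoints], axis=0)


class Level:
    """One pyramid step. Hypothetical random-weight stand-ins for f_k, G_k, g_k;
    in LSLP-GAN these are learned networks."""

    def __init__(self, n_points: int, latent_dim: int, noise_dim: int,
                 rng: np.random.Generator):
        d = 3 * n_points
        # f_k stand-in: linear map from a flattened (n_points, 3) cloud to a code.
        self.enc = rng.standard_normal((latent_dim, d)) / np.sqrt(d)
        # g_k stand-in: the pseudo-inverse of the encoder.
        self.dec = np.linalg.pinv(self.enc)
        # G_k stand-in: one hidden layer producing a residual correction,
        # conditioned on the code itself plus a noise vector.
        self.w1 = rng.standard_normal((64, latent_dim + noise_dim)) * 0.1
        self.w2 = rng.standard_normal((latent_dim, 64)) * 0.1
        self.noise_dim = noise_dim
        self.n_points = n_points

    def encode(self, x: np.ndarray) -> np.ndarray:
        return self.enc @ x.reshape(-1)

    def correct(self, z: np.ndarray, rng: np.random.Generator) -> np.ndarray:
        noise = rng.standard_normal(self.noise_dim)
        h = np.maximum(0.0, self.w1 @ np.concatenate([z, noise]))  # ReLU
        return z + self.w2 @ h

    def decode(self, z: np.ndarray) -> np.ndarray:
        return (self.dec @ z).reshape(self.n_points, 3)


def lslp_forward(x0, levels, rng):
    """Run K steps: upsample (U), encode (f_k), correct (G_k), decode (g_k)."""
    x = x0
    outputs = [x0]
    for level in levels:
        x_up = upsample(x)               # (1) non-learnable upsampling
        z = level.encode(x_up)           # (2) encode into the latent space
        z_corr = level.correct(z, rng)   # (3) latent correction by the generator
        x = level.decode(z_corr)         # (4) decode back to a point cloud
        outputs.append(x)
    return outputs


rng = np.random.default_rng(0)
n0, k_steps = 64, 3
x0 = rng.standard_normal((n0, 3))        # stands in for an accepted input X_0
levels = [Level(n0 * 2 ** (k + 1), latent_dim=32, noise_dim=8, rng=rng)
          for k in range(k_steps)]
clouds = lslp_forward(x0, levels, rng)
print([c.shape for c in clouds])         # [(64, 3), (128, 3), (256, 3), (512, 3)]
```

Because each stand-in level works on a flattened cloud of fixed size, the point count must double exactly at every step; a learned point-cloud encoder would more plausibly be permutation-invariant, so this sketch should be read only as a map of the pipeline.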

Abstract

Constructing high-quality generative models for 3D shapes is a fundamental task in computer vision with diverse applications in geometry processing, engineering, and design. Despite recent progress in deep generative modelling, existing approaches have not yet achieved synthesis of finely detailed 3D surfaces, such as high-resolution point clouds, from scratch. In this work, we propose to employ the latent-space Laplacian pyramid representation within a hierarchical generative model for 3D point clouds. We combine the recently proposed latent-space GAN and Laplacian GAN architectures to form a multi-scale model capable of generating 3D point clouds at increasing levels of detail. Our evaluation demonstrates that our model outperforms existing generative models for 3D point clouds.

Contact

If you have any questions about this work, please contact us at adase-3ddl@skoltech.ru.